A web scraper is software that scans unstructured data sources, such as documents, websites, and audio or video files, extracts data from them, and converts it into structured data, such as tables with fields and records in a database.

It uses Artificial Intelligence (Natural Language Processing) to find, identify and tag data.

How can we use a Scraper?

- Find data.
- Structure unstructured data.
- Collect product & price information.
- Collect competitive information.
- Collect consumer opinions & sentiments.
- Understand what our digital reputation is.
- Monitor public information (e.g., stocks).

Scrapers differ from database queries. A database query has direct access to the data in the database (for example, through the right username and password). A scraper has no such access: it must analyze the document or website, decode its visual presentation (for example, the rendered page), map and identify the data elements of interest, and then extract them into another format, location or database.
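The mapping step described above can be illustrated with a minimal sketch that uses only the Python standard library. It assumes a hypothetical page fragment in which each product sits in an `<li class="product">` element with `name` and `price` spans. The markup, the class names and the `scrape_products` helper are all invented for illustration; a real website will need its own mapping.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; real sites will use different tags and class names.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$19.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$5.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects one record per <li class="product"> element."""

    def __init__(self):
        super().__init__()
        self.records = []    # records found so far (the structured output)
        self._field = None   # field currently being read: "name" or "price"

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.records.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Text is stored only while we are inside a mapped <span>.
        if self._field and self.records:
            self.records[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape_products(html):
    parser = ProductParser()
    parser.feed(html)
    # Convert the price text to a number so the output is typed, structured data.
    return [
        {"name": r["name"], "price": float(r["price"].lstrip("$"))}
        for r in parser.records
    ]


products = scrape_products(SAMPLE_HTML)
```

Unlike a database query, nothing here knows the schema in advance: the record structure exists only because the parser was told which visual elements correspond to which fields.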

Scrapers come in multiple flavors, distinguished by their source of information:

- Web Scraper. These scrapers collect information from websites.
- Document Scraper. These scrapers collect information from documents (e.g., PDF, Word, text, CSV and others).
- Audio/Video Scraper. These scrapers collect information from multimedia (e.g., YouTube, MP4 files, MP3 files and other audio/video formats).

ADDITIONAL SERVICES

A more advanced scraper can open a list of websites and, for each website in the list, convert it to text, map and identify the data elements, and then store or write those data elements in other formats or databases.
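As a rough sketch of that loop, the Python example below walks a list of URLs, converts each page to text, identifies one kind of data element (prices) and writes the result as CSV. Several things are assumptions made for illustration: the `fetch` callable (passed in so the sketch runs without network access), the sample pages, the price pattern and the two-column output.

```python
import csv
import io
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Keeps the text content of a page and drops the tags.

    Script and style content is not filtered out in this sketch.
    """

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        if data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    extractor = TextExtractor()
    extractor.feed(html)
    return " ".join(extractor.parts)


# Assumed pattern for the data element of interest: prices such as "$1,299.00".
PRICE_PATTERN = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?")


def scrape_sites(urls, fetch):
    """Return one row per price found; `fetch` maps a URL to its HTML."""
    rows = []
    for url in urls:
        text = html_to_text(fetch(url))
        for price in PRICE_PATTERN.findall(text):
            rows.append({"url": url, "price": price})
    return rows


def rows_to_csv(rows):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["url", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical pages standing in for real websites.
PAGES = {
    "https://example.com/a": "<html><body><h1>Widget</h1><p>Now $19.99</p></body></html>",
    "https://example.com/b": "<p>Gadget: $1,299.00 or $5</p>",
}

rows = scrape_sites(list(PAGES), PAGES.__getitem__)
csv_text = rows_to_csv(rows)
```

In a real project, `fetch` would be replaced by an HTTP client, and the pattern matching by whatever identification logic the sources require.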

The deliverable is a fully functional scraper that runs on the designated computer and operating system, opens the file repository and creates the output.


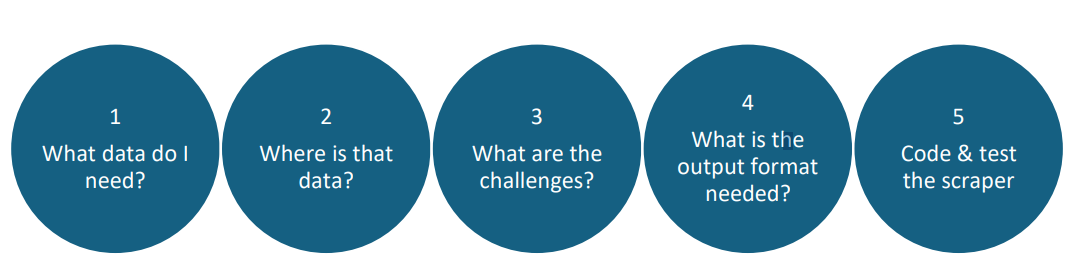

- Need – clearly define the data that is needed, why it is needed and how often.
- Source – clearly define the sources of information to be analyzed (for example, list the websites to be scraped). Identify how often these sources change, how reliable they are, etc.
- Challenges – clearly define the challenges to overcome when scraping data from the sources. For example, for an encrypted file, note the encryption passwords and algorithms to be used; for websites protected by CAPTCHA or other more sophisticated anti-scraping technologies, document them in detail so that they can be considered during solution development, or dropped from the project if they can’t be overcome.
- Output – clearly define the output format of the scraped data. It is typically a structured table, but other formats are possible. Documenting it is key so that it can be met during the coding and testing phases.
- Coding & testing – this is when the actual scraper is built, taking all the previous steps into account. Testing is critical, as reliability is one of the most difficult aspects of a scraper.

- Purpose includes why the scraper is needed, the bigger business target and the success criteria.
- Sources include all the places where the scraper needs to look for information, be it video files, websites, documents or otherwise. They also cover whether these sources are fixed or variable and, if variable, how they vary (for example, how a source’s name changes over time).
- Destination includes all the specifications of the data output: the format and, if it is a table, the definition of each record (which fields), length, number of records, etc.
- Specific functionality includes any calculations to be done after scraping, for example data quality assurance, classification, segmentation, clustering, binning or any other grouping of data.
- Time is how often the scraper needs to run and when each system or component needs to execute and interact with the others so that all systems stay synchronized.
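To make the "specific functionality" item in the list above more concrete, here is a minimal Python sketch of two post-scraping steps: a basic quality check with de-duplication, followed by binning. The record layout (`name`, `price`), the bin edges and the band labels are assumptions chosen for illustration, not a prescribed design.

```python
def clean_records(records):
    """Basic data hygiene: drop incomplete rows and exact duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        name = (record.get("name") or "").strip()
        price = record.get("price")
        if not name or not isinstance(price, (int, float)) or price < 0:
            continue  # fails the quality check
        key = (name.lower(), price)
        if key in seen:
            continue  # duplicate of an earlier row
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


# Hypothetical bin edges; each upper bound is exclusive.
PRICE_BINS = [(10, "low"), (100, "medium"), (float("inf"), "high")]


def price_bin(price):
    for upper, label in PRICE_BINS:
        if price < upper:
            return label


def bin_records(records):
    """Return copies of the records with a price_band field added."""
    return [dict(r, price_band=price_bin(r["price"])) for r in records]


# Hypothetical scraped rows, including a duplicate and two incomplete rows.
raw = [
    {"name": " Widget ", "price": 19.99},
    {"name": "widget", "price": 19.99},
    {"name": "", "price": 5.0},
    {"name": "Gadget", "price": None},
    {"name": "Gizmo", "price": 250},
]

processed = bin_records(clean_records(raw))
```

Which checks and groupings apply depends on the purpose and destination defined for the project; classification or clustering would slot in at the same stage.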

A: Scraping is always done on publicly available information and/or websites. SentientInfo does not support scraping protected documents, information that has any type of legal or intellectual property protection, or even public websites that warn scrapers and robots against scraping. All our scraping is ethical, and we can advise you on the alternatives you have.
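One common way a public website tells robots not to scrape it is a `robots.txt` file. The sketch below shows how such a file could be checked with Python's standard `urllib.robotparser` module before any page is requested. The file contents, the `example-scraper` user agent and the URLs are hypothetical, and this is only one possible check, not a description of SentientInfo's actual process.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real run would load it from the site itself.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


public_ok = is_allowed(ROBOTS_TXT, "example-scraper", "https://example.com/products")
private_ok = is_allowed(ROBOTS_TXT, "example-scraper", "https://example.com/private/report.html")
```

A `robots.txt` check does not cover terms of service or legal protections, which still need to be reviewed separately.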

A: No. We’ve built many scrapers, and while creating a “generic” scraper may seem plausible, we have observed that websites are often dynamic and that even factors such as spelling can seriously affect a scraper’s performance. All our scrapers are ad hoc.

A: It depends on the complexity of the scraper; the number, availability and complexity of the data sources; the complexity of the output; and the amount of processing to be done on the scraped data. It also depends on how often the data sources change and how dynamic the scraping environment is. In general, a small scraper (for example, a single website with few data points that changes less than once a year) could cost as little as $2,500 USD, while a highly complex scraper covering many highly variable and dynamic websites, with several output formats and extensive classification and post-processing, could cost several tens of thousands of dollars.

A: It depends on the complexity of the sources, the data to be identified, the outputs, etc. In general, small scrapers can be developed in a few days, while large scrapers can take up to a few months.

A: We write the code ourselves, so your project will not end up in an outsourced software house where a large team calls you with the same questions over and over but delivers no results. In addition to our technical capability, we have extensive experience overcoming anti-scraping technologies such as CAPTCHA, session time detection, IP detection and others. We can split a query into multiple queries sent from diverse IP addresses, masking it so that anti-scraping websites can’t detect it, and then join the partial answers into a single answer to create the output without issues. We can also do extensive post-processing on the data, from general hygiene to advanced classification, clustering and ad hoc calculations.