Natural Language Processing (NLP) is a branch of Artificial Intelligence in which algorithms are trained to process text and speech. These algorithms can perform tasks such as entity recognition, translation, conversation (chat), and text summarization, as well as more advanced tasks such as command recognition and execution.

NLP can be used to build interfaces that let people interact with a system through language, much as they would with another person.

For example, NLP can power a ChatBot that interacts with users to provide information, answer questions, report order status, and handle customer service in general. It can also go deeper: a system can process a large amount of text and summarize it, identify the entities and parts of speech (noun, verb, adjective, adverb, etc.) in the text for further processing (for example, to identify and execute commands), or translate text from one language to another.

How can we use Natural Language Processing Models?

- Information extraction.
- Speech Transcription.
- Language Translation.
- ChatBots.
- Diagnostics (Healthcare, business).
- Legal document analysis.
- Energy consumption analysis.
- Resume screening.
- Document / news summarization.
- Knowledge base creation & maintenance.
- Text-to-speech.
- Speech-to-text.
- Sentiment / psychological analysis.

There are many models and approaches; the ones we focus on include the following:

- Key Word Recognition. This is one of the oldest methods. It identifies key words (or key lemmas) within a given text and reacts or responds based on them. Because it has no understanding of context, its accuracy is low, but for narrowly scoped tasks it can provide a fast and economical solution.

- Markov Chains & Artificial Neural Networks. These are more modern systems that can perform better than Key Word Recognition. They require an encoding step that converts the text to numbers so the model can process the data. They have limited context understanding, but given good training data they work well for many applications.

- Recurrent Neural Networks & LSTM. These are neural networks that process text as a sequence, carrying a hidden state from one token to the next. LSTM (Long Short-Term Memory) networks add gating that helps retain information over longer spans. In some cases they improve on the performance of plain artificial neural networks. They still lack full context understanding, although their memory provides partial context understanding. They do require more computing power for training.

- Transformers & LLMs. The transformer pursues full context understanding by means of several encoders and/or decoders and the inclusion of the “attention” concept. Large models built on this architecture are often called Large Language Models (LLMs). Examples include encoder-based models such as Google’s BERT (Bidirectional Encoder Representations from Transformers) and its variations, and decoder-based models such as the GPT family.
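As a rough illustration of the first two approaches, the sketch below shows keyword recognition and the encoding step that turns text into numbers. It is a minimal example under stated assumptions, not a production implementation: the intent names, the keyword table, and the function names are hypothetical, and bag-of-words is only one of several possible encodings.

```python
import re
from collections import Counter

# Hypothetical keyword-to-intent table; a real system would define its own.
INTENT_KEYWORDS = {
    "order_status": {"order", "status", "tracking", "delivery"},
    "refund": {"refund", "return", "money"},
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def recognize_intent(text):
    """Return the intent whose keywords overlap most with the text, or None."""
    tokens = set(tokenize(text))
    scores = {
        intent: len(tokens & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def build_vocabulary(texts):
    """Map each distinct token to an integer index, in sorted order."""
    vocab = sorted({tok for text in texts for tok in tokenize(text)})
    return {tok: i for i, tok in enumerate(vocab)}


def encode(text, vocab):
    """Bag-of-words encoding: the count of each vocabulary token in the text."""
    counts = Counter(tokenize(text))
    return [counts.get(tok, 0) for tok in vocab]


# Keyword recognition: no context, only word overlap.
print(recognize_intent("Where is my order? I need the tracking status."))

# Encoding step: text becomes a numeric vector a neural network can consume.
vocab = build_vocabulary(["the cat sat", "the dog sat down"])
print(encode("the cat and the dog", vocab))
```

Note that the encoder silently drops words outside the vocabulary ("and" in the example) and ignores word order, which is one reason these simpler approaches have limited context understanding.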

ADDITIONAL SERVICES

Text Processing can take many shapes and can be connected with other components, such as databases, APIs, and control systems. We can help you connect your various systems and components.

Contact our sales team

-

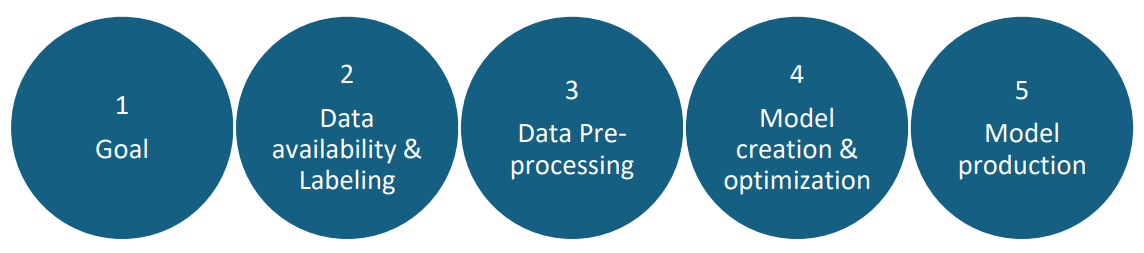

Goal – what are we trying to achieve and why?

-

Data availability & labeling – what data do I have available? Is that data enough to describe the goal? Do I have the data labeled or can I label the data so I can proceed with the training of the model?

-

Data Pre-processing. Is the data quality fit for purpose? What are the early conclusions driven by the exploratory data analysis? Should we include all features? Do we need to pre-process data (scale, transform, encode, etc.)?

-

Model creation. Code the NLP model. In some cases, the solution can incorporate multiple models or even already-made models.

-

Model production. Once we have a solid training model, create an application that uses this model for the required application. This can be done as a widget to perform conversation with users or more sophisticated interactions.
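The data availability and data quality questions above can be partly answered with a quick audit before any model is coded. The following is a minimal sketch, assuming the data is a list of (text, label) pairs with `None` marking a missing label; the function name `audit_dataset` and the sample records are hypothetical.

```python
from collections import Counter


def audit_dataset(samples):
    """Summarize basic quality issues in a list of (text, label) pairs."""
    texts = [text.strip() for text, _ in samples]
    labels = [label for _, label in samples]
    return {
        "total": len(samples),
        # Texts that are empty or contain only whitespace.
        "empty_texts": sum(1 for t in texts if not t),
        # Samples that still need labeling before supervised training.
        "unlabeled": sum(1 for label in labels if label is None),
        # Exact duplicate texts (after trimming whitespace).
        "duplicates": len(texts) - len(set(texts)),
        # Label distribution, useful for spotting class imbalance.
        "label_counts": dict(Counter(l for l in labels if l is not None)),
    }


# Hypothetical sample data for illustration only.
samples = [
    ("Where is my order?", "order_status"),
    ("I want a refund", "refund"),
    ("Where is my order?", "order_status"),
    ("   ", "refund"),
    ("Cancel my subscription", None),
]
print(audit_dataset(samples))
```

A report like this does not replace a full exploratory data analysis, but it quickly shows whether labeling or cleaning work is needed before training.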

-

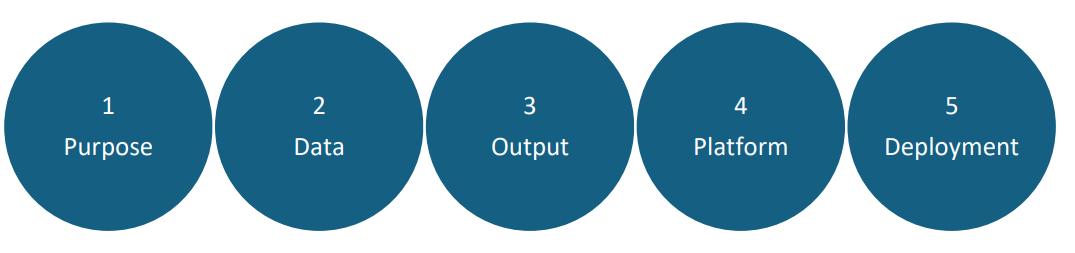

Purpose relates to the objectives that the company has related to the business problem they want to resolve using the NLP model. For example, the type of interaction or conversation expected, or functionality (e.g., summarization).

-

Data relates to the available data, labeling of the data, quality of the data and preprocessing of the data so it can be used for NLP modeling.

-

Output relates to the way in which the solution will request information and provide a result (for example a text or document entry and a display of a result), as well as the performance indicators used for the success criteria (for example, Recall, Precision, F1, Accuracy, etc.).

-

Platform relates to the requirements of the application that will put the NLP model into production (once trained).

-

Deployment relates to how to deploy and maintain this NLP model solution within the company and circle back to the Purpose.
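The performance indicators named under Output can be computed directly from a model's predictions. The sketch below shows the standard definitions for a binary case; the function name `classification_metrics` and the example labels are illustrative assumptions, and in practice a library such as scikit-learn is commonly used instead.

```python
def classification_metrics(y_true, y_pred, positive):
    """Compute precision, recall, F1, and accuracy for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    accuracy = correct / len(pairs) if pairs else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Hypothetical sentiment labels for illustration only.
y_true = ["pos", "pos", "neg", "neg", "pos"]
y_pred = ["pos", "neg", "neg", "pos", "pos"]
print(classification_metrics(y_true, y_pred, positive="pos"))
```

Which indicator matters most depends on the Purpose: for example, a resume screening tool may favor recall (missing few good candidates), while an automated command executor may favor precision (rarely acting on a wrong command).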

A: NLP models enable you to convert unstructured data (free text) into structured data (for example, rows in a database). Once the data is structured, you can query it, run statistical and other types of analysis, and visualize it (for example, display it in a dashboard to analyze trends).
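To make the idea concrete, here is a minimal sketch of turning free text into queryable rows. It assumes a hypothetical message format and uses a regular expression as a stand-in for the extraction step; in a real solution a trained NLP model would perform the extraction. The table name, column names, and sample messages are illustrative only.

```python
import re
import sqlite3

# Stand-in for an NLP extraction model: a regex for a hypothetical message format.
ORDER_PATTERN = re.compile(
    r"order\s+#?(\d+).*?\b(delayed|delivered|cancelled)\b",
    re.IGNORECASE,
)


def extract_record(text):
    """Return (order_id, status) if the text mentions both, else None."""
    match = ORDER_PATTERN.search(text)
    if match is None:
        return None
    return int(match.group(1)), match.group(2).lower()


def load_into_database(messages):
    """Store extracted records in an in-memory SQLite table and return the connection."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, status TEXT)")
    records = [r for r in map(extract_record, messages) if r is not None]
    conn.executemany("INSERT INTO orders VALUES (?, ?)", records)
    conn.commit()
    return conn


# Hypothetical customer messages for illustration only.
messages = [
    "My order #1042 was delayed again.",
    "Order 77 has been delivered, thanks!",
    "Just saying hello.",
    "Order #9 is delayed.",
]
conn = load_into_database(messages)

# Once structured, the data can be queried and aggregated like any other table.
query = "SELECT status, COUNT(*) FROM orders GROUP BY status ORDER BY status"
for status, count in conn.execute(query):
    print(status, count)
```

The same table could then feed a dashboard or a statistical analysis, which is not practical while the information is locked inside free text.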

A: Many reasons:

- A proprietary model gives you full control over the scope & functionality.

- A proprietary model gives you full control over security & privacy.

- A proprietary model gives you a better opportunity to connect to other systems and databases and to integrate solutions more tightly.

- A proprietary model is largely a one-time cost, whereas publicly available systems such as ChatGPT carry usage costs that may be cheap now but can become expensive over time.

A: It depends on the specific scope, application, connectivity, and overall complexity; however, NLP models can reuse a lot of existing code and build on existing pre-trained solutions, which can make them more affordable and cost-effective.

A: It depends on the specific scope, application, connectivity, and overall complexity; simple NLP models can be deployed in a few days, while highly complex systems may take a few weeks.

A: SentientInfo has proprietary NLP models, including its own LLM, which can be trained and configured for many applications. We can redeploy and customize our own code quickly and cost-effectively.

Another benefit is that we have been users of such models in large corporations. We understand the complexities of working with large companies, managing multiple stakeholders, and dealing with corporate politics. In summary: we know our stuff, we are lean, and we know how to deliver technically while working closely with corporate teams and adapting to local culture to achieve superior results.